Twitter is formally rolling out a new reply feature meant to curb “harmful or offensive” language by making the user aware that what they’re saying isn’t very nice.

This feature is an extension of the “do you want to read this article before retweeting it” prompt Twitter recently added as part of the company’s overall “healthy conversation” effort.

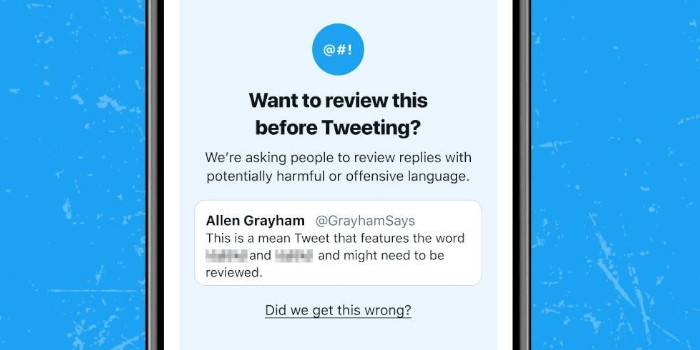

This new “self-censor” feature uses algorithms to detect harmful or offensive language, then prompts the user to review their reply before posting and points out that the response could be considered hateful.

The company said the prompts will pop up on English-language Twitter accounts on Apple and Android devices from Wednesday.

The feature uses artificial intelligence (AI) to detect harmful language in a tweet a user has composed, the company said. Before the user presses the send button, an alert pops up on the screen, asking them to review what they’ve written in the post. The user can then edit, delete or send the reply.
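The flow described above (check the reply, show a prompt if it is flagged, then let the user edit, delete or send) can be illustrated with a minimal sketch. Twitter has not published its model, so everything here is an assumption for illustration only: the names `looks_harmful`, `submit_reply` and `FLAGGED_TERMS` are hypothetical, and a toy word list stands in for the AI detection.

```python
from typing import Callable, Optional, Tuple

# Hypothetical placeholder list; Twitter's actual detection model is not public.
FLAGGED_TERMS = {"idiot", "stupid"}


def looks_harmful(text: str) -> bool:
    """Toy stand-in for the AI detector: flag a reply containing a listed term."""
    words = {w.strip(".,!?\"'").lower() for w in text.split()}
    return bool(words & FLAGGED_TERMS)


def submit_reply(
    text: str,
    choose: Callable[[str], Tuple[str, Optional[str]]],
    is_harmful: Callable[[str], bool] = looks_harmful,
) -> Optional[str]:
    """Return the reply that gets posted, or None if the user deletes it.

    `choose` plays the role of the on-screen prompt: it receives the draft and
    returns ("send", None), ("edit", new_text) or ("delete", None).
    """
    if not is_harmful(text):
        return text  # nothing flagged, so no prompt is shown

    action, revised = choose(text)
    if action == "send":
        return text  # the user keeps the original wording
    if action == "edit":
        # Assumption: the revised text is posted without a second check.
        return revised
    if action == "delete":
        return None
    raise ValueError(f"unknown action: {action}")
```

For example, `submit_reply("You are an idiot.", lambda draft: ("edit", "I disagree."))` would post the revised text, while an unflagged reply is returned unchanged without ever calling the prompt.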

Twitter says that in its tests, 34% of people who received the prompt revised their initial “hateful” reply or decided not to send it at all. After being prompted once, people composed on average 11% fewer “offensive” replies in the future.

Forsided, 07.05.2021